pop AI first online workshop on AI in support of Civil Security: mapping functionalities and controversies

On 15 March 2022, the pop AI project organised its first online workshop (webinar), presenting its preliminary findings on AI functionalities in support of Civil Security and on controversy mapping.

The pop AI project is a 24-month Coordination and Support Action (CSA) funded by Horizon 2020 and coordinated by the National Centre for Scientific Research Demokritos (NCSRD), based in Athens. The core vision of the project is to boost trust in AI by increasing awareness and current social engagement, consolidating distinct spheres of knowledge, and delivering a unified European view and recommendations, thereby creating an ecosystem and the structural basis for a sustainable and inclusive European AI hub for Law Enforcement. Among its key results, pop AI will provide a pandect of recommendations for the ethical use of AI by Law Enforcement Authorities (LEAs), together with short-term foresight scenarios on AI ethics controversies and a long-term roadmap for 2040 with risks, mitigation and policy strategies to get there.

The objectives of the workshop were (i) to present and discuss the preliminary findings of the project, (ii) to discuss AI scenario prioritisation, complementarity and synergies within the SU-AI cluster (ALIGNER, pop AI and STARLIGHT), and (iii) to provide entry to the platform for an inclusive stakeholders’ community engagement around the ethical development and application of AI in LEAs. The workshop had 50 attendees, including 10 practitioners from Law Enforcement Authorities (20%), while external experts accounted for 40% of participants: members of the pop AI Stakeholder Advisory Board, EC Officers, and representatives from the sibling projects ALIGNER and STARLIGHT as well as from other projects of the extended AI research cluster.

In the context of pop AI results, this first webinar illustrated the work carried out within two different tasks: the taxonomy of AI functionalities in the security domain (led by NCSRD), and the mapping of the controversy ecosystem of AI applications in the civil security domain (led by Trilateral Research Enterprise).

After a short overview of the pop AI project by the Coordinator, the first part of the webinar gave an overview of the use of AI in five areas of application in civil security in Europe, namely (i) crime prevention, (ii) crime investigation, (iii) cyber operations, (iv) migration, asylum and border control, and (v) administration of justice. Controversial cases were presented in order to discuss and identify the relevant legal, ethical, social and organisational issues, the potential and the concerns of AI application in these areas, and the stakeholders involved, from development and use to policy-making for these technologies, including civil society organizations, local and national authorities, and MEPs. The discussion of the findings among the partners showed a need for multi-disciplinary collaboration to address issues that emerge at different levels: technological limitations (e.g. poor design decisions, biases in datasets), problematic use of the technology (e.g. lack of training), lack of policies and transparency at an organizational level, and the lack of unified and harmonized legal frameworks, a gap which the Artificial Intelligence Act aims to cover. This exercise led to the mapping of the controversy ecosystem of AI innovation in civil security, allowing the identification and inclusion of stakeholders beyond innovators and technologists.

The second part of the webinar explained the theoretical approach for building a taxonomy of AI-related LEA functionalities. This activity is of utmost importance, both to guide upcoming activities in the project (e.g. the definition of a practical Ethics toolbox for the use of AI by LEAs, as well as the delivery of roadmaps and future scenarios) and to serve as a common ground for aligning discussions and work with the sibling projects. The proposed approach is to catalogue AI technologies by their functionalities (e.g. recognition, communication, data analytics, surveillance) and, for each functionality, to identify the related ethical and legal issues, the technological maturity level and the relation to other functionalities.

In the third session, the floor was given to the representatives of the sibling projects ALIGNER and STARLIGHT, which address topics where synergy and complementarity have been identified and which are engaged in defining research roadmaps and outlining possible pilot or future scenarios for the use of AI technologies in LEA activities.

Within the ALIGNER project, work began in October 2021 on the development of an ‘archetypical scenario’ and the associated framework for the forthcoming scenario narratives. This led to the recognition that AI technology in the context of policing and law enforcement has a dual nature, where it is both a crime and security threat and yet it is also utilised in the service of police and law enforcement agencies (P&LEA). From the perspective of ALIGNER scenarios, there is a need for them to reflect this duality and hence ALIGNER will produce ‘AI crime and security threat scenarios’ and ‘P&LEA AI use-case scenarios’. A framework to support the scenario narratives for both of these types has been developed from two typologies based on the large (and increasing) number of examples and case studies as they come to light. It is envisaged that this process of discovery will continue throughout the life of the project, generating insights and modifications that can in turn be incorporated into the iterations of the ALIGNER research roadmap document.

During the ALIGNER session, many synergies with pop AI were identified. Both projects look at ethical aspects of the use of AI by law enforcement, both will develop scenarios for the use of AI by law enforcement agencies and police, and both will categorize AI technologies. The two projects have engaged with each other to find the specific synergies within these areas and to explore in more detail how to leverage each other’s results. For example, while the technology watch approach by ALIGNER looks in detail at a limited selection of relevant AI technologies, pop AI will provide an exhaustive taxonomy mapping of AI functionalities. These approaches can and will build on each other. Similarly, the ALIGNER taxonomy of AI-supported crime might be relevant for pop AI. Although the initial version of the ALIGNER taxonomy is only planned for release in September 2023, the timeline discrepancies have already been identified and early engagement between the projects is planned.

Regarding the use of scenarios, the workshop made clear that the technological and ‘business’ aspects of AI technologies need to be assessed through a cross-disciplinary approach that includes the societal implications of AI (mis)use. This was identified as another area of exchange between pop AI and ALIGNER.

The workshop concluded with the STARLIGHT session. The project’s main ambitions include (i) implementing human-centric AI-based solutions with a coherent data strategy for the safety and security of our society and (ii) building a community that can promote a human-centric approach to AI for security that is responsible, explainable, trustworthy and accountable.

An early draft of the Use Cases was presented and an alignment discussion took place within the cluster of SU-AI projects. The following tasks of the project were identified and discussed in the context of synergy with pop AI: Privacy & Security by design – Data Handling Support and Comparative Study on Data Access; Ethics by Design – Multidisciplinary Perspectives on Algorithmic Bias; LEAs Acceptance: Ethical and Socio-Economic Aspects; Fundamental Rights, Law and Security Research; Impact Assessment Cycle. Finally, STARLIGHT explained the project’s Ethical and Legal Observatory (ELO) platform, and a timeline was discussed for follow-up actions and participation in the next ELO meeting.

The webinar was conducted in a spirit of fruitful cooperation and provided food for thought for pop AI partners and external experts, while showing opportunities for further joint activities with the sibling projects and their networks.

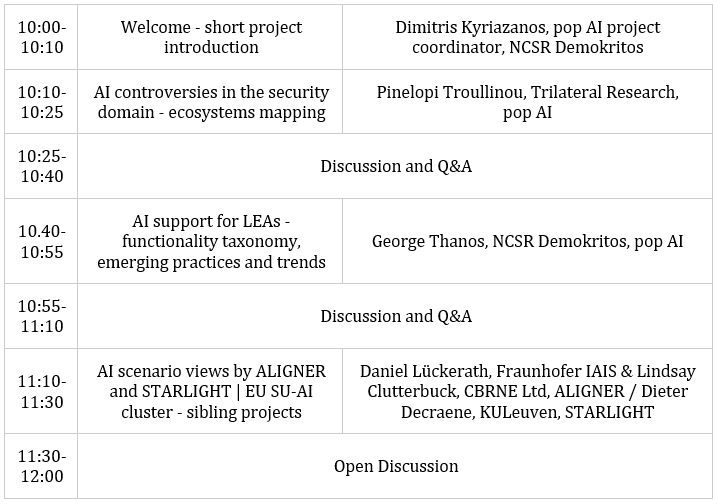

AGENDA

Summary compiled by pop AI [Paola Fratantoni, Dimitris Kyriazanos and Pinelopi Troullinou] with contributions from ALIGNER (Lindsay Clutterbuck and Daniel Lückerath) and STARLIGHT (Dieter Decraene)

pop AI, ALIGNER and STARLIGHT are funded by the Horizon 2020 Framework Programme of the European Union for Research and Innovation. GA numbers: 101022001, 101020574 and 101021797 respectively.